[WIP]

Technologies: AWS, Pulumi, serverless, React, LLMs

My Protege was a web-based AI platform that I built and ran from 2021 to 2024 with my co-founder. Its unique offering was that anyone could train an AI-powered chatbot through a normal conversation, with no document ingestion required. The trained chatbot could then be shared with others.

Users received a vanity subdomain that served a branded PWA for their chatbot. Their customers could then install it on their phones and access it whenever they needed it.

I built all of the tech: the infrastructure, the backend, the two frontends (one for my customers and one for their customers), the internal libraries that handled the proprietary knowledge-graph database, and the fast CI/CD deployment system.

Everything was 1-merge deploy, with isolated QA environments for feature testing.

Ultimately the project did not grow into a sustainable business, but it was an invaluable learning experience in managing all aspects of the tech.

Infrastructure

Infrastructure gets complicated quickly, is expensive to lose, and error-prone to rebuild. Starting from a solid infrastructure foundation allows an engineer to build quickly and confidently.

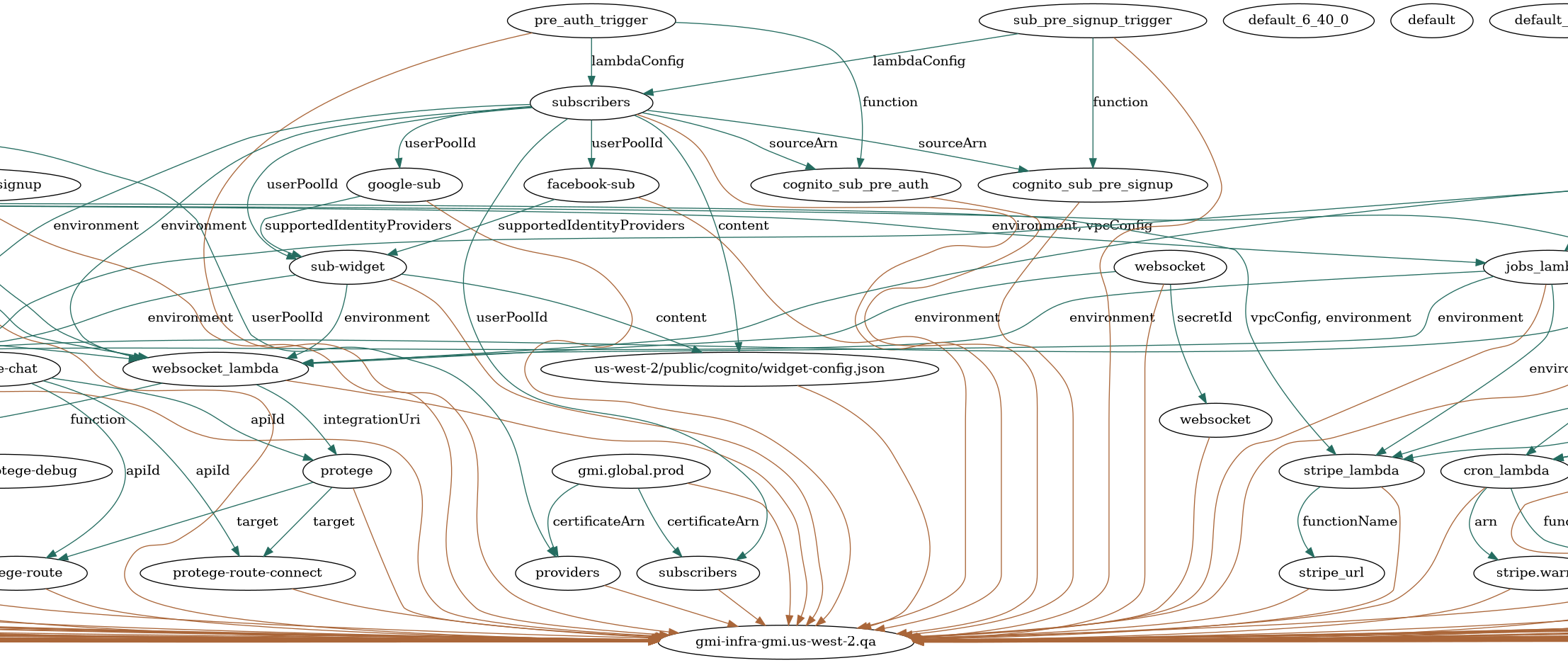

I managed My Protege’s infrastructure as code (IaC) with Pulumi. Coming from a Terraform-heavy background, I used this greenfield project as an opportunity to get familiar with Pulumi and learn its strengths and weaknesses relative to what I already knew.

I designed the infrastructure along two dimensions: environment (e.g., prod/qa) and region (e.g., us-west-2). Although I only deployed to a single region, baking in the region dimension early was a low-effort, high-payoff decision should I ever need to change regions or go multi-region.
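The two-dimensional layout can be sketched in plain Python. This is a minimal illustration, not the actual Pulumi program: the `Deployment` class, the `ENVIRONMENTS` and `REGIONS` tuples, and the `name-environment-region` naming scheme are all assumptions made for the example.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical values; the write-up only mentions prod/qa and us-west-2.
ENVIRONMENTS = ("prod", "qa")
REGIONS = ("us-west-2",)


@dataclass(frozen=True)
class Deployment:
    """One cell of the environment x region matrix."""

    environment: str
    region: str

    @property
    def stack_name(self) -> str:
        # Assumed naming scheme: one stack per (environment, region) pair.
        return f"{self.environment}-{self.region}"

    def resource_name(self, base: str) -> str:
        # Suffix every resource so names never collide across cells.
        return f"{base}-{self.environment}-{self.region}"


def all_deployments(environments=ENVIRONMENTS, regions=REGIONS):
    """Enumerate every (environment, region) combination."""
    return [Deployment(e, r) for e, r in product(environments, regions)]
```

Under this scheme, going multi-region would only mean adding an entry to `REGIONS`; every resource name already carries its region, so nothing else has to be renamed.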

I leveraged safe practices like using an IAM-secured, versioned S3 bucket for the Pulumi state backend, ensuring that changes could only be made by authorized users/roles and could be rolled back if necessary. Infra secrets were encrypted at rest and managed through IAM user-linked KMS keys.

Admin Frontend

The My Protege admin frontend allowed experts to train their AI simply by answering questions that the AI asked. The AI then unpacked “facts” from the answer, selected a fact to expand, and repeated the process by asking the expert a follow-up question.
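The ask, unpack, select, and follow-up cycle described above can be sketched as a small loop. This is only an illustration under stated assumptions: every function name here is hypothetical, the sentence splitter stands in for the AI's fact extraction, and picking the oldest unexpanded fact is an assumed selection policy rather than the one My Protege used.

```python
from typing import Callable, List


def split_sentences(answer: str) -> List[str]:
    """Stand-in for fact extraction: treat each sentence as one fact."""
    return [s.strip() for s in answer.split(".") if s.strip()]


def make_followup(fact: str) -> str:
    """Stand-in for follow-up question generation."""
    return f"Tell me more about: {fact}"


def training_loop(
    ask_expert: Callable[[str], str],
    first_question: str,
    rounds: int,
    extract_facts: Callable[[str], List[str]] = split_sentences,
    followup: Callable[[str], str] = make_followup,
) -> List[str]:
    """Run up to `rounds` question/answer cycles and return the facts learned."""
    known_facts: List[str] = []
    expanded = set()
    question = first_question
    for _ in range(rounds):
        answer = ask_expert(question)
        # Unpack facts from the answer, skipping any already known.
        for fact in extract_facts(answer):
            if fact not in known_facts:
                known_facts.append(fact)
        # Assumed policy: expand the oldest fact not yet asked about.
        candidates = [f for f in known_facts if f not in expanded]
        if not candidates:
            break
        chosen = candidates[0]
        expanded.add(chosen)
        question = followup(chosen)
    return known_facts


# Scripted expert used only to exercise the loop.
scripted_answers = {
    "What do you do?": "I bake bread. I sell it at markets.",
    "Tell me more about: I bake bread": "I use sourdough.",
}
facts = training_loop(lambda q: scripted_answers.get(q, ""), "What do you do?", rounds=2)
```

Passing the extraction and follow-up steps in as callables keeps the loop itself independent of whichever model performs them.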

The admin frontend was a sleek Flutter-based PWA that was snappy and looked consistent on iOS, Android, and the desktop browser. I designed and built several custom widgets, including the training widget (under the “Train” tab) and chat widget (under the “Test” tab). These widgets did the heavy lifting of giving the expert a tight feedback loop between training the AI and testing it.

I also employed frontend best practices, like using Redux for clear state management and a router-based component for unified navigation. Login used OAuth through Cognito, with Google and Facebook as identity providers.

API Backend

My Protege’s API backend was a Python-based Docker image, hosted on AWS Lambda (serverless) for cost-effective autoscaling. Having managed K8s clusters, as well as